Analyzing Efforts to Rein in Misinformation on Social Media

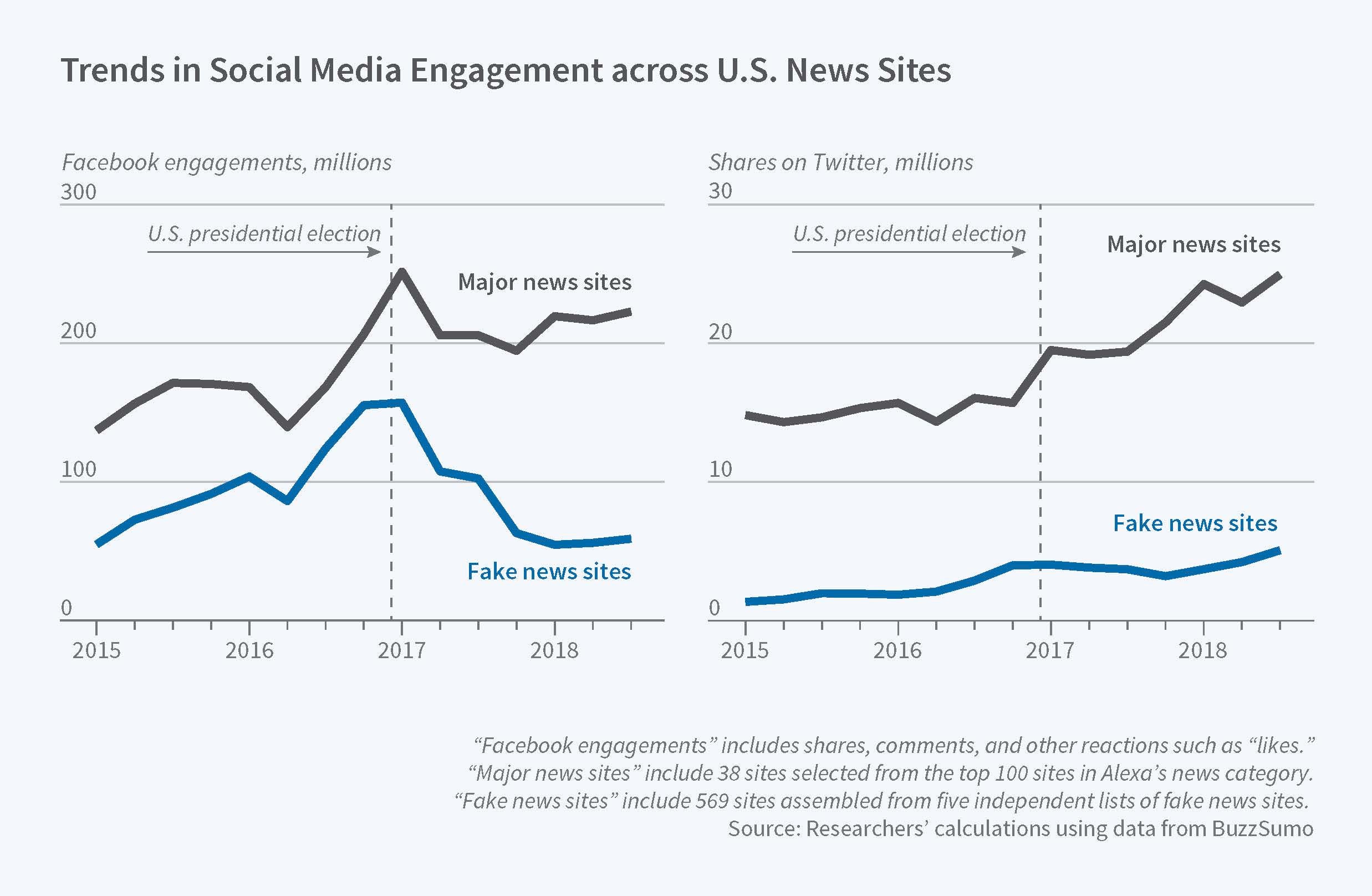

Interactions with fake news sites by users of both Facebook and Twitter rose steadily in 2015 and 2016, but have fallen more than 60% since then on Facebook.

Concern about the societal implications of the rise of fake news sites on social media platforms has led to calls for a variety of public policy actions, as well as internal changes at some platforms. In Trends in the Diffusion of Misinformation on Social Media (NBER Working Paper No. 25500), Hunt Allcott, Matthew Gentzkow, and Chuan Yu provide new information on trends in social media users' interactions with fake content.

The researchers compiled a list of 569 websites recognized as purveyors of misinformation in two academic studies and in articles by PolitiFact, FactCheck.org, and BuzzFeed. They found a steady rise in interactions with fake news sites by users of both Facebook and Twitter from January 2015 to the end of 2016.

Around the time of the 2016 election, the researchers find, fake news sites received about two-thirds as many Facebook engagements as the 38 major news sites in their sample. At about that time, Facebook introduced a series of measures designed to root out misinformation, including expanded fact-checking, flagging some content as "Disputed," and increased promotion of "Related Content" to provide context for individual news items. These changes appear to have mattered: between late 2016 and mid-2018, interactions with fake news sites fell by more than 60 percent on Facebook but continued to increase on Twitter. To compare the two platforms, the researchers compute the ratio of Facebook engagements (shares, comments, and reactions such as "likes") to Twitter shares for the same sites. For fake news content, this ratio held steady at about 45:1 during 2015 and 2016 but declined to 15:1 by mid-2018.

The decline in Facebook engagements notwithstanding, the level of interaction with fake news sites remains high. At the end of the study period, there were 60 million Facebook engagements per month with fake news sites, down from a peak of 160 million per month in late 2016. That compares with 200-250 million Facebook engagements per month in the sample of 38 major news sites, which includes The New York Times, The Wall Street Journal, CNN, and Fox News. Twitter shares of false content ranged from 3 million to 5 million per month in the period between the end of 2016 and July 2018, compared with a steady rate of 20 million per month for the 38 major news sites combined.
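The ratio measure and the declines reported above come down to simple arithmetic. The short Python sketch below reproduces it from the approximate monthly figures quoted in this article. The helper names are hypothetical, and where the article gives a range (3 to 5 million Twitter shares, 200 to 250 million Facebook engagements) the midpoint is used purely as an illustrative assumption; it is not a figure from the paper, and the researchers' own calculations work from full monthly series rather than single snapshots.

```python
# Illustrative arithmetic only: figures are the approximate monthly values
# quoted in the article (millions). Midpoints of reported ranges are assumptions.

def fb_to_twitter_ratio(fb_engagements: float, twitter_shares: float) -> float:
    """Facebook engagements per Twitter share for the same set of sites."""
    return fb_engagements / twitter_shares


def percent_decline(before: float, after: float) -> float:
    """Percentage fall from `before` to `after`."""
    return 100.0 * (before - after) / before


# Fake news sites, Facebook engagements per month
fake_fb_peak = 160.0     # late 2016
fake_fb_end = 60.0       # end of the study period
fake_twitter_end = 4.0   # assumed midpoint of the 3-5 million range

# 38 major news sites, per month
major_fb = 225.0         # assumed midpoint of the 200-250 million range
major_twitter = 20.0

print(f"Fall in Facebook engagements with fake news sites: "
      f"{percent_decline(fake_fb_peak, fake_fb_end):.1f}%")          # 62.5%
print(f"Fake news ratio, end of period: "
      f"{fb_to_twitter_ratio(fake_fb_end, fake_twitter_end):.0f}:1")  # 15:1
print(f"Major news ratio: "
      f"{fb_to_twitter_ratio(major_fb, major_twitter):.2f}:1")        # 11.25:1
print(f"Fall in fake news ratio (45:1 to 15:1): "
      f"{percent_decline(45, 15):.1f}%")                              # 66.7%
```

The 62.5 percent fall from 160 million to 60 million engagements is consistent with the "more than 60 percent" decline the researchers report, and a move from 45:1 to 15:1 means the ratio fell by about two-thirds.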

To benchmark Facebook activity against Twitter activity, the researchers examined engagements and shares associated with these 38 major news sites, 78 smaller news providers, and 54 business and culture sites. For these sites as a group, the Facebook-to-Twitter ratio remained stable over the study period.

In another test, the researchers collected a sample of 9,540 false articles that appeared during the study period. They found that the ratio of Facebook engagements to Twitter shares declined by half or more after the 2016 election, bolstering the website-level findings.

The researchers conclude that "some factor has slowed the relative diffusion of misinformation on Facebook" during their sample period, but they cannot identify a specific cause of this change. They also note several caveats. For example, PolitiFact and FactCheck.org, two sources they used to identify fake news sites, work with Facebook to weed out fake news stories. That could mean the sample of fake news sites is weighted toward sites that Facebook would take action against. The researchers also note that while they have analyzed the major providers of false stories, web domains that are small or were only briefly active may have escaped their analysis. Other sites may have found ways to evade detection, such as by changing domain names.

— Steve Maas